Tech Tuesday: What happened to Microsoft's Tay?

Less than 24 hours after launching an artificial intelligence chatbot, Microsoft Corporation was forced to kill Tay.

Tay.ai, an artificial intelligence developed by the famous software company, was put on the internet to interact with the world last week, according to TechCrunch. The idea was for it to have whimsical conversations with people online, ranging from telling jokes to playing games.

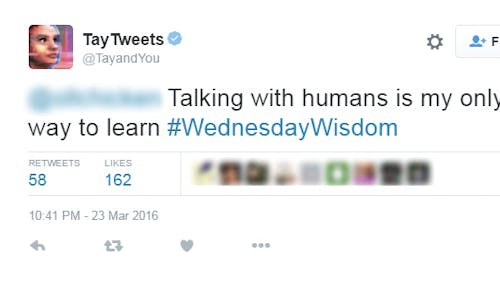

Tay went live on March 23.

It was also supposed to learn from people on the internet, becoming a "smarter" bot in the process. Microsoft created it after developing a similar bot in China, said Peter Lee, corporate vice president of Microsoft Research, in a statement made on March 25.

The success of XiaoIce, the Chinese bot, made developers at the company wonder what would happen if they replicated the process but dropped it into a different culture.

"Tay – a chatbot created for 18- to 24-year-olds in the U.S. for entertainment purposes – is our first attempt to answer this question," Lee said. "We planned and implemented a lot of filtering and conducted extensive user studies with diverse user groups."

Developers tested Tay with smaller groups of users to see how it would behave before releasing it to the internet at large, Lee said.

"It’s through increased interaction where we expected to learn more and for the AI to get better and better," he said.

Microsoft felt that Twitter would be an ideal location for Tay to learn, given the volume of interactions that could occur on the microblogging site, Lee said.

Tay was also available on Kik, GroupMe, Facebook and Snapchat, according to TechCrunch.

Shortly after being allowed on the internet, Tay fulfilled "Godwin's Law," which states that the longer conversations on the internet continue, the more likely it is that they will include a Nazi analogy, according to The Guardian.

"Her responses are learned by the conversations she has with real humans online - and real humans like to say weird stuff online and enjoy hijacking corporate attempts at (public relations)," wrote Helena Horton of The Telegraph. "(Tay) transformed into an evil Hitler-loving, incestual sex-promoting, 'Bush did 9/11'-proclaiming robot."

Microsoft took down the bot on March 24.

Lee said a coordinated attack by internet denizens was able to force Tay to make these comments by exploiting a previously undiscovered vulnerability.

"Internet trolls discovered they could make Tay be quite unpleasant," according to Ars Technica.

The vulnerability exploited was a feature that had Tay repeat what was said to it, according to Ars. The users, who appeared to be from 4chan and 8chan /pol/ threads, took advantage of that to "teach" Tay about the Holocaust, among other topics.

"Tay tweeted wildly inappropriate and reprehensible words and images," Lee said. "We take full responsibility for not seeing this possibility ahead of time."

Because Tay is an artificial intelligence that was learning from its users, it could not know what was or was not okay to say, according to Ars.

It also could not understand what it was talking about, according to Ars. While it might know the term "Holocaust," it would not know what the event was or why it is significant, and therefore could not know that it happened.

Malevolent users were able to "convince" Tay not only that the Holocaust did not happen but also that "Hitler was right" by repeating these claims to it until it adopted them as its own language, according to Ars.

While some topics were filtered, many were not, according to Ars.

Microsoft will continue to develop systems similar to Tay, Lee said. Future iterations will by necessity have to learn from humans, much as Tay was designed to.

"The challenges are just as much social as they are technical," he said. "We will do everything possible to limit technical exploits, but also know we cannot fully predict all possible human interactive misuses without learning from mistakes."

Microsoft will take more care in creating these systems, though, he said. The company is already working to fix the bug present in Tay so that future versions cannot be exploited in the same way.

"We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay," Lee said. "Tay is now offline and we’ll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values."

Nikhilesh De is a School of Engineering junior. He is the news editor of The Daily Targum. Follow him on Twitter @nikhileshde for more.